The NVIDIA GeForce RTX 5090, released in January 2025 as a flagship GPU, ships with 32GB of GDDR7 VRAM. Recently, reports surfaced about a custom 128GB version from Chinese factories, priced at a staggering $13,200 and tailored for AI workloads. This has sparked intense debate, but its feasibility is highly questionable from a technical perspective. Below, I analyze the practicality of this modified GPU, covering memory specifications, hardware challenges, and potential issues.

Technical Foundation and Memory Configuration

The RTX 5090 features a 512-bit memory bus, like the RTX PRO 6000 Blackwell. In the stock layout, 16 modules each use a 32-bit interface. In clamshell mode, modules sit on both sides of the PCB and each runs at 16 bits, so the same bus can accommodate up to 32 modules:

- Current GDDR7 memory modules, supplied by Samsung and Micron, are available in 16Gb (2GB) or 24Gb (3GB) capacities.

- Using 3GB modules, a dual-sided configuration achieves 96GB of VRAM (32 modules × 3GB).

- To reach 128GB, 32Gb (4GB) modules are required. However, as of 2025, neither Samsung nor Micron has publicly released such high-density chips. Samsung’s 24Gb GDDR7, announced in late 2024 with speeds up to 42.5Gbps, is the highest capacity available, with no mention of 32Gb variants. Micron’s GDDR7 roadmap for early 2025 also focuses on 16Gb and 24Gb.
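The capacity arithmetic above can be sketched in a few lines of Python. This is an illustrative calculation, not a vendor tool: the function names are made up for this article, and it assumes each module uses 32 bits of the bus in the stock layout and 16 bits in clamshell mode. The 32Gb entry is hypothetical, since no such GDDR7 module has been publicly released.

```python
# Sketch: VRAM capacity from bus width, module density, and clamshell mode.
# Assumption: 32 bits per module in the stock layout, 16 bits in clamshell.

def module_count(bus_width_bits: int, clamshell: bool) -> int:
    """Number of memory modules the bus can address."""
    bits_per_module = 16 if clamshell else 32
    return bus_width_bits // bits_per_module

def vram_gb(bus_width_bits: int, module_gbit: int, clamshell: bool) -> int:
    """Total capacity in GB; module_gbit is density in gigabits (8 Gb = 1 GB)."""
    return module_count(bus_width_bits, clamshell) * module_gbit // 8

BUS_WIDTH = 512  # RTX 5090 memory bus width in bits

for gbit in (16, 24, 32):  # 32Gb is hypothetical: not publicly available
    for clamshell in (False, True):
        layout = "clamshell" if clamshell else "single-sided"
        total = vram_gb(BUS_WIDTH, gbit, clamshell)
        print(f"{gbit}Gb modules, {layout}: {total}GB")
```

Only the last combination (32Gb modules in clamshell mode) reaches 128GB, which is why the claim depends entirely on a chip density that has not been announced.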

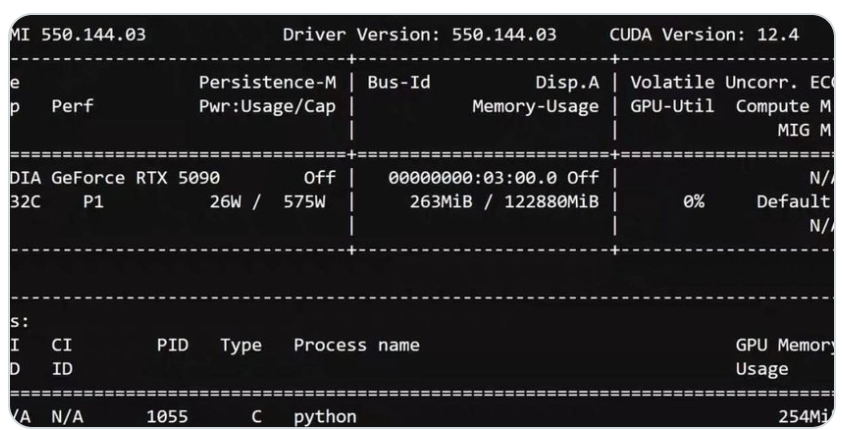

The report claims the 128GB version uses GDDR7, but a leaked screenshot references “GDDR7X”, a specification that does not exist, which raises doubts. Achieving 128GB would likely require a custom PCB, components transplanted from other GPUs (“donor cards”), and modified firmware or BIOS to bypass NVIDIA’s restrictions.

Implementation Challenges and Potential Risks

While upgrading to 128GB is theoretically possible, several obstacles make it impractical:

- Memory Availability: There’s no public evidence of 4GB GDDR7 modules in production. Speculation about prototype chips or factory-exclusive channels exists, but such sources are unreliable for commercial use. Previous modifications, like the 48GB RTX 4090, doubled capacity by moving the GPU to a clamshell PCB carrying twice as many of the same 2GB modules; the 5090’s case additionally demands a memory density that is not yet on the market.

- Compatibility and Stability: The screenshot shows CUDA 12.4, which does not match the 5090’s standard drivers: support for the RTX 50 series arrived with CUDA 12.8. Modified cards carry no official warranty and risk short circuits, overheating, or instability. Community feedback indicates that similar 4090 modifications often fail due to poor PCB components.

- Market and Regulatory Factors: This card targets the Chinese market to circumvent U.S. export bans, but the RTX 5090 itself is restricted there, so supply depends on gray-market channels. The high price (base card ~$2,200, memory ~$1,000, plus a ~$10,000 markup) reflects small-batch production costs and risks, not intrinsic value.
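The CUDA version point lends itself to a quick sanity check. The sketch below parses the `CUDA Version` field from an nvidia-smi banner line and compares it with the minimum release assumed to support the RTX 50 series. The 12.8 threshold is an assumption of this sketch, the helper names are invented for illustration, and the sample banner is a hypothetical line modeled on the usual nvidia-smi layout, not the leaked screenshot.

```python
import re

# Assumption: RTX 50-series (Blackwell) support first shipped with CUDA 12.8.
MIN_CUDA_FOR_RTX_50 = (12, 8)

def parse_cuda_version(smi_banner: str):
    """Return (major, minor) from a 'CUDA Version: X.Y' field, or None."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_banner)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))

def plausible_for_rtx_50(smi_banner: str) -> bool:
    """True if the reported CUDA version meets the assumed minimum."""
    version = parse_cuda_version(smi_banner)
    return version is not None and version >= MIN_CUDA_FOR_RTX_50

# Hypothetical banner line for illustration only.
sample = "| NVIDIA-SMI 550.54.14    Driver Version: 550.54.14    CUDA Version: 12.4 |"
print(parse_cuda_version(sample), plausible_for_rtx_50(sample))
```

A failed check does not prove a screenshot is fake, since text output is easy to edit either way; it only flags a readout that is inconsistent with a stock driver stack.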

The report may well be a hoax: it rests solely on an unverified nvidia-smi screenshot from an unknown source, with no physical photos or independent validation. In contrast, the official RTX PRO 6000 Blackwell offers 96GB of VRAM for ~$8,000, with proven reliability.
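To put the pricing in perspective, the following sketch adds up the reported cost components and compares cost per gigabyte of VRAM. All figures are the approximate ones quoted in this article, and the variable names are illustrative.

```python
# Approximate figures quoted in this article, in USD.
mod_price_components = {
    "base_card": 2_200,
    "memory": 1_000,
    "markup": 10_000,
}

mod_total = sum(mod_price_components.values())

def usd_per_gb(price_usd: float, vram_gb: int) -> float:
    """Cost per gigabyte of VRAM."""
    return price_usd / vram_gb

mod_cost = usd_per_gb(mod_total, 128)  # rumored 128GB RTX 5090
pro_cost = usd_per_gb(8_000, 96)       # official 96GB workstation card

print(f"Modified card total: ${mod_total:,}")
print(f"Modified card: ${mod_cost:.0f}/GB, official 96GB card: ${pro_cost:.0f}/GB")
```

On these numbers the modified card costs roughly $103 per GB against about $83 per GB for the official 96GB card, so the rumored product is more expensive per gigabyte even before accounting for the missing warranty.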

Community Perspectives and Alternatives

Community discussions label the 128GB version as “likely fake” or “not ready for mass production” due to memory density limits and lack of evidence. Alternatives such as multi-card setups (e.g., four last-gen consumer GPUs) or professional cards like the A100 80GB are suggested as more cost-effective and stable. AMD’s unified memory solutions, such as the Ryzen AI Max+ 395, are also noted as affordable options.

Conclusion: Low Feasibility, Proceed with Caution

The RTX 5090 128GB variant is theoretically feasible but hinges on unconfirmed 4GB GDDR7 modules and complex modifications. Current evidence suggests it is more likely speculative hype or a scam than a real product. If you’re focused on AI workloads, consider reliable options like the RTX PRO 6000 Blackwell (96GB) or wait for the GDDR7 ecosystem to mature. Should Samsung or Micron release higher-density chips, this configuration could become viable, but for now, skepticism is warranted.